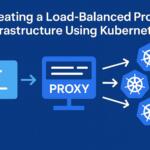

Load balancing is a crucial technique for distributing incoming network traffic across multiple servers. It helps ensure high availability and reliability by preventing any single server from being overwhelmed by too much traffic. In this article, we will explore how to configure HAProxy as a reverse proxy to efficiently load balance HTTP traffic.

What is HAProxy?

HAProxy (High Availability Proxy) is a popular open-source load balancer and proxy for TCP and HTTP-based applications. It is highly regarded for its performance, flexibility, and ability to handle complex configurations.

HAProxy as a Reverse Proxy

A reverse proxy sits between the client and the server, intercepting requests from clients and forwarding them to one or more backend servers. By using HAProxy as a reverse proxy, you can direct HTTP requests to different servers, making your web application scalable and resilient to failure.

Setting Up HAProxy

To set up HAProxy for load balancing HTTP traffic, first, ensure that you have HAProxy installed on your server. On a Debian-based system, you can install it using the following command:

    sudo apt-get update
    sudo apt-get install haproxy

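After installation, configure HAProxy by editing its configuration file, located at /etc/haproxy/haproxy.cfg. After each change, you can validate the file with haproxy -c -f /etc/haproxy/haproxy.cfg before reloading the service.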

HAProxy Configuration Example

Below is an example of a basic HAProxy configuration for load balancing HTTP traffic across multiple backend servers.

    # Global settings
    global
        log /dev/log local0
        log /dev/log local1 notice
        chroot /var/lib/haproxy
        user haproxy
        group haproxy
        daemon

    # Default settings
    defaults
        log global
        # HTTP mode is needed for HTTP logging, path-based ACLs, and cookies
        # (HAProxy defaults to tcp mode when no mode is set)
        mode http
        option httplog
        timeout connect 5000ms
        timeout client 50000ms
        timeout server 50000ms

    # Frontend definition: Accepts HTTP traffic on port 80
    frontend http_front
        bind *:80
        acl url_is_static path_end .jpg .jpeg .png .css .js
        use_backend static_servers if url_is_static
        default_backend app_servers

    # Backend definition: Load balancing for the application servers
    backend app_servers
        balance roundrobin
        server app1 192.168.1.10:80 check
        server app2 192.168.1.11:80 check

    # Backend definition: Static file servers
    backend static_servers
        balance roundrobin
        server static1 192.168.1.12:80 check
        server static2 192.168.1.13:80 check

Explanation of Configuration

Global settings: These settings define log parameters, chroot, and the user and group under which HAProxy will run.

Defaults section: The default settings for logging and timeouts are defined here.

Frontend definition: The frontend section listens on port 80 and uses the acl directive to classify requests by the ending of their URL path. Requests for static files (such as images, CSS, or JavaScript) are sent to the static_servers backend, while all other requests go to the app_servers backend.

Backend definition: The backend sections define the servers that will handle the requests. We use the roundrobin load balancing method, which distributes requests evenly among the available servers.
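As a variation on the frontend rules described above, the following sketch matches file extensions case-insensitively and also routes by path prefix. The -i flag and path_beg are standard HAProxy ACL features, but the ACL name url_static_dir and the /static/ and /images/ prefixes are assumptions chosen for illustration, not part of the setup described in this article.

```haproxy
frontend http_front
    bind *:80
    mode http
    # Match extensions regardless of case (.PNG as well as .png)
    acl url_is_static  path_end -i .jpg .jpeg .png .css .js
    # Hypothetical path prefixes; adjust to the application's layout
    acl url_static_dir path_beg /static/ /images/
    # A request matching either ACL goes to the static backend
    use_backend static_servers if url_is_static or url_static_dir
    default_backend app_servers
```

Routing by prefix can be handy when static assets live under a known directory but do not always carry a recognizable file extension.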

Load Balancing Algorithms in HAProxy

HAProxy supports several load balancing algorithms. The most common ones are:

- Round Robin: Distributes traffic evenly across all backend servers. This is the default algorithm.

- Least Connections: Sends traffic to the server with the fewest active connections. Useful when requests vary widely in duration or connections are long-lived.

- Source IP Hash: Hashes the client IP address so that requests from the same client reach the same backend server. This provides basic session persistence as long as the set of available servers does not change.

- URI Hash: Hashes the request URI so that the same URI always reaches the same server, which is useful for caches and for sharding content.
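The algorithms listed above are selected with the balance directive in each backend. The fragments below are a minimal sketch of how that might look. The backend names are hypothetical, the server addresses are reused from the earlier example, and the weight values are assumed for illustration.

```haproxy
# Least connections; app2 is given a larger weight so it takes a larger share
backend app_leastconn
    mode http
    balance leastconn
    server app1 192.168.1.10:80 check weight 1
    server app2 192.168.1.11:80 check weight 2

# Source IP hash: the same client IP is mapped to the same server
backend app_source
    mode http
    balance source
    # Consistent hashing limits remapping when servers are added or removed
    hash-type consistent
    server app1 192.168.1.10:80 check
    server app2 192.168.1.11:80 check

# URI hash: the same URI is always served by the same server
backend static_by_uri
    mode http
    balance uri
    server static1 192.168.1.12:80 check
    server static2 192.168.1.13:80 check
```

A frontend would pick one of these with default_backend or use_backend, just as in the basic configuration.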

Session Persistence (Sticky Sessions)

In some scenarios, it is necessary to route requests from a specific client to the same backend server to maintain session state. This is called session persistence or sticky sessions. HAProxy can achieve this through the use of cookies or source IP address hashing.

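Here’s an example of session persistence using cookies:

    backend app_servers
        balance roundrobin
        cookie SERVERID insert indirect nocache
        server app1 192.168.1.10:80 check cookie app1
        server app2 192.168.1.11:80 check cookie app2

In this example, HAProxy inserts a cookie named SERVERID into its responses. The cookie's value (app1 or app2) identifies the backend server that handled the first request, and HAProxy uses it to route the client's subsequent requests to the same server. Choose a cookie name that the application does not already use (for example, not JSESSIONID on Java application servers), so that HAProxy's cookie does not collide with the application's own session cookie.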
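For clients that do not accept cookies, persistence can instead be keyed on the source IP address. The sketch below uses a stick table, which is a related technique rather than hashing: HAProxy records which server each client IP was first sent to and keeps sending that client there. The table size and expiry are assumed values, not recommendations.

```haproxy
backend app_servers
    mode http
    balance roundrobin
    # Remember the server chosen for each client IP (size and expiry are assumptions)
    stick-table type ip size 200k expire 30m
    # Look up and store entries using the client's source address
    stick on src
    server app1 192.168.1.10:80 check
    server app2 192.168.1.11:80 check
```

Keep in mind that many clients behind a single NAT gateway share one source address and would all land on the same server with this approach.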

Health Checks and Failover

HAProxy periodically checks the health of backend servers so that traffic is only routed to healthy ones. The check option on each server line enables these checks. By default, a check simply tries to open a TCP connection to the server at a regular interval.

    backend app_servers
        balance roundrobin
        server app1 192.168.1.10:80 check
        server app2 192.168.1.11:80 check

If a server fails several consecutive health checks (three by default), HAProxy marks it as down and stops sending traffic to it until it passes its checks again.
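A plain TCP check only confirms that the port is open, so an HTTP-level check is often more useful for web applications. The following is a minimal sketch of one. The /health endpoint and the app3 standby server at 192.168.1.14 are hypothetical, and the interval and thresholds are written out explicitly with what are, as far as I know, HAProxy's default values.

```haproxy
backend app_servers
    mode http
    balance roundrobin
    # Send an HTTP request instead of a bare TCP connect (/health is hypothetical)
    option httpchk GET /health
    # Treat only a 200 response as healthy
    http-check expect status 200
    # Check every 2s; mark down after 3 failures, up again after 2 successes
    default-server inter 2s fall 3 rise 2
    server app1 192.168.1.10:80 check
    server app2 192.168.1.11:80 check
    # Receives traffic only when all non-backup servers are down
    server app3 192.168.1.14:80 check backup
```

Lower inter and fall values detect failures faster, at the cost of more check traffic and a higher chance of marking a briefly slow server as down.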

Scaling and High Availability

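For high availability, the load balancer itself must not become a single point of failure. You can run two or more HAProxy instances, for example behind another load balancer or sharing a virtual IP address, so that if one instance fails, another can take over. Additionally, keeping application session data in a shared session store or database means that sessions survive regardless of which HAProxy instance or backend server handles a request.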

Here’s an example of running HAProxy in a high availability setup using Keepalived for failover:

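    # Keepalived configuration for HAProxy failover
    # (instance name, interface, and router ID are example values)
    vrrp_instance VI_1 {
        interface eth0
        virtual_router_id 51
        virtual_ipaddress {
            192.168.1.100
        }
    }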

In this setup, Keepalived assigns a virtual IP address to the active HAProxy instance, which will be reassigned to another HAProxy instance in case of failure.

Conclusion

HAProxy provides a robust and efficient solution for load balancing HTTP traffic in high-traffic web applications. By configuring it as a reverse proxy, you can achieve both scalability and reliability, ensuring that your services remain available even under heavy load.
